Automatic Modulation Recognition Across SNR Variability Via Domain Adversary

Introduction

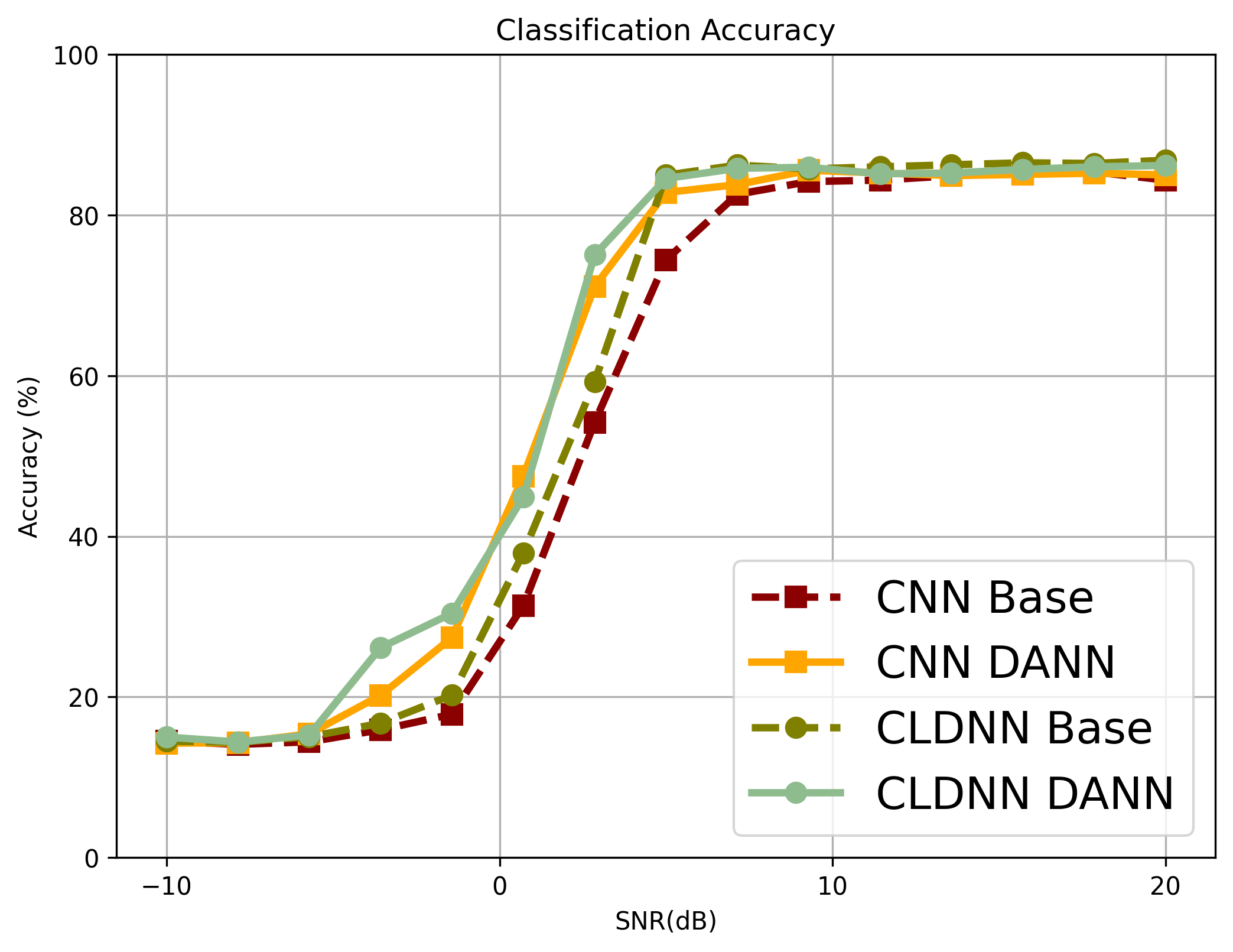

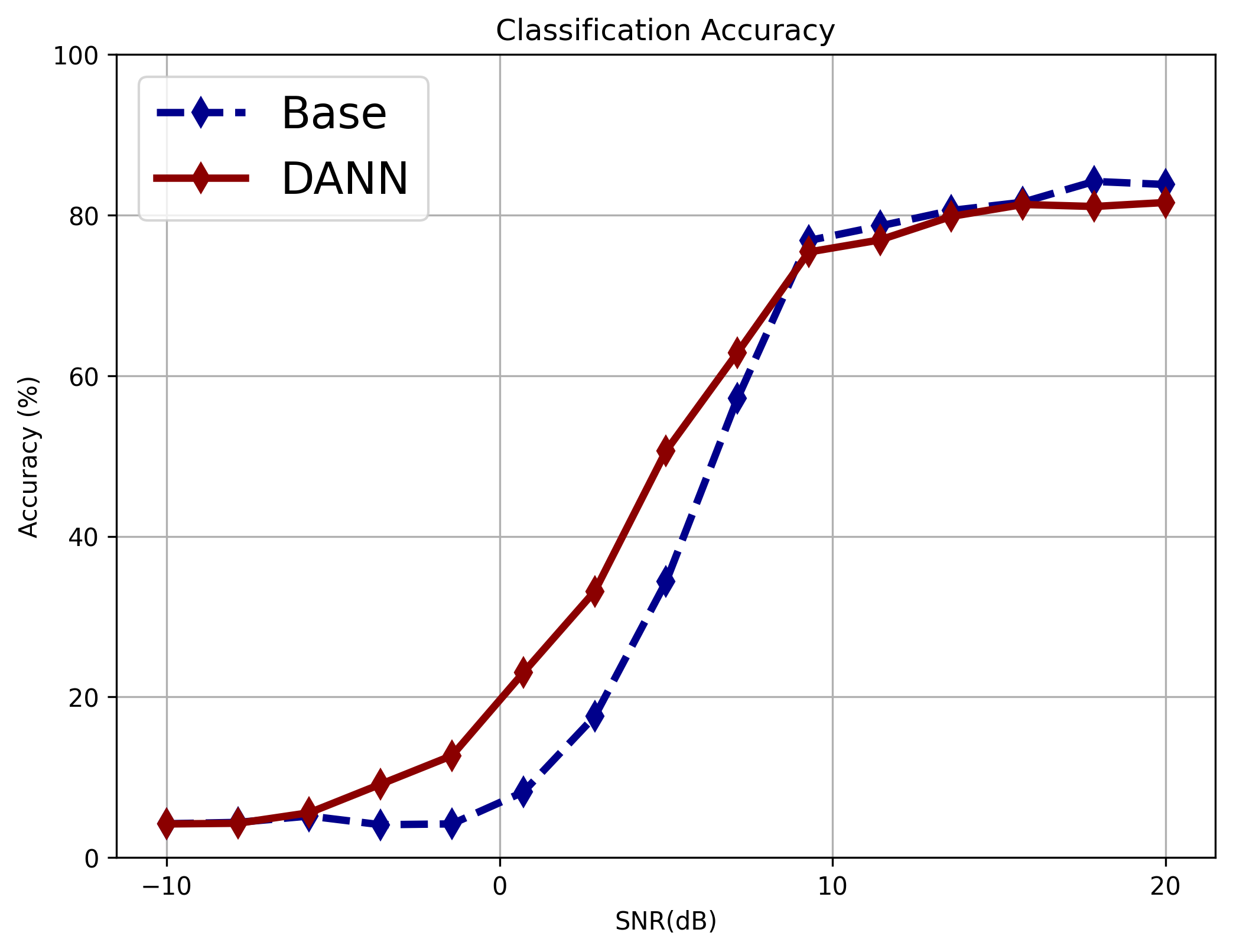

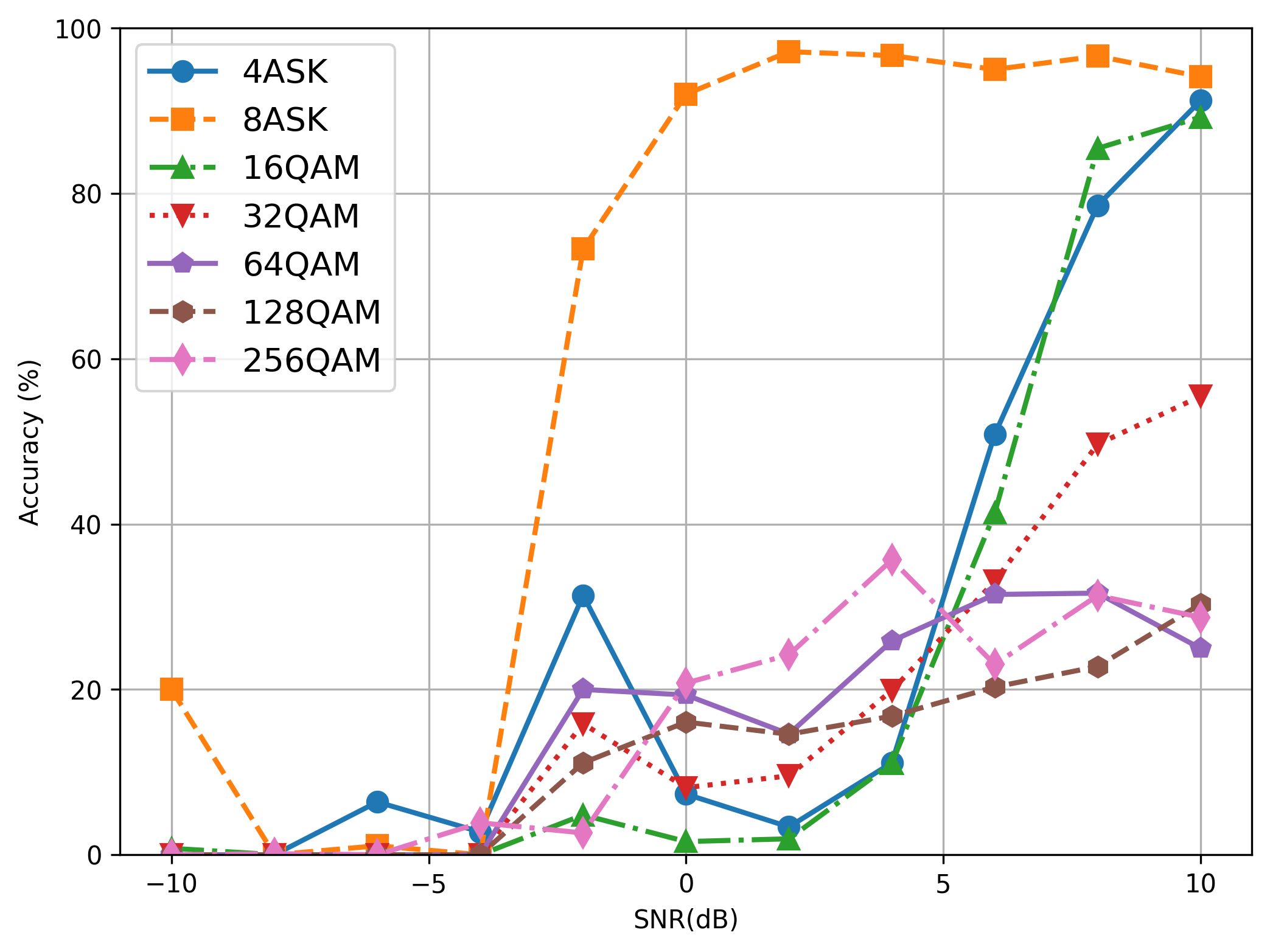

This paper focuses on Automatic Modulation Recognition (AMR), a crucial technology for modern communication systems that enables automatic identification of modulation schemes. Traditional AMR methods rely on feature-based techniques such as higher-order statistics and cyclostationary analysis. However, as signal complexity increases, Deep Learning (DL)-based methods, particularly convolutional neural networks (CNNs) and recurrent neural networks (RNNs), have shown superior performance by extracting complex patterns from in-phase/quadrature (I/Q) samples. Despite these advances, domain variability caused by signal-to-noise ratio (SNR) fluctuations significantly degrades model accuracy in real-world applications. To address this, the paper proposes a Domain-Adversarial Neural Network (DANN)-based AMR framework, which aligns feature distributions across different SNR levels to mitigate domain shifts and enhance model robustness. Experimental results show up to 16% accuracy improvement in low to moderate SNR conditions while maintaining performance in other SNR ranges.

Problem Formulation

AMR models struggle with domain shifts caused by SNR variability, leading to performance degradation when applying a model trained on a source domain to a target domain. Formally, given:

- Feature space \( X \) and modulation class labels \( Y \),

- A classifier function \( f: X \to Y \),

- Source domain \( D_s \) with feature distribution \( P_s(X) \) and conditional label distribution \( P_s(Y|X) \),

- Target domain \( D_t \) with feature distribution \( P_t(X) \) and conditional label distribution \( P_t(Y|X) \).

The domain shift is quantified as:

$$\delta(X) = \| P_s(X) - P_t(X) \|.$$

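This shift causes a performance drop, measured as the difference between the classification accuracies \( A_{D_s} \) and \( A_{D_t} \) on the source and target domains: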

$$\Delta A = A_{D_s} - A_{D_t}.$$

Thus, an effective AMR framework should align feature distributions \( P_s(X) \) and \( P_t(X) \) to minimize \( \delta(X) \) and reduce \( \Delta A \), ensuring consistent performance across SNR variations.
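As a rough illustration of the two quantities above, the sketch below estimates \( \delta(X) \) and \( \Delta A \) from samples. The source does not specify which norm is used, so the L1 distance between binned histograms of a one-dimensional feature is an assumption made here, and the function names are hypothetical.

```python
from collections import Counter


def histogram(samples, bin_width=0.5):
    """Empirical probability mass of 1-D feature values over fixed-width bins."""
    counts = Counter(int(s // bin_width) for s in samples)
    total = len(samples)
    return {b: c / total for b, c in counts.items()}


def domain_shift_l1(source, target, bin_width=0.5):
    """L1 distance between the two empirical histograms (assumed choice of norm)."""
    p_s = histogram(source, bin_width)
    p_t = histogram(target, bin_width)
    bins = set(p_s) | set(p_t)
    return sum(abs(p_s.get(b, 0.0) - p_t.get(b, 0.0)) for b in bins)


def accuracy(predictions, labels):
    """Fraction of predictions that match the true labels."""
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


def accuracy_drop(src_pred, src_true, tgt_pred, tgt_true):
    """Delta A: source-domain accuracy minus target-domain accuracy."""
    return accuracy(src_pred, src_true) - accuracy(tgt_pred, tgt_true)
```

With this estimator, identical sample sets give a shift of 0 and sample sets with disjoint support give the maximum value of 2.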

DANN tackles domain bias by learning from labeled source data while ensuring low expected loss on unlabeled target data. The DANN framework consists of:

- Feature Extractor – Learns domain-invariant representations.

- Domain Classifier – Distinguishes between source and target domain samples.

- Label Predictor – Maps learned features to modulation labels.

A key component is the gradient reversal layer, which reverses gradients during backpropagation to encourage domain-invariant feature learning. This adversarial approach enables robust generalization across SNR variations, improving AMR performance in real-world settings.
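The behavior of the gradient reversal layer can be sketched in a few lines. This is a framework-free illustration rather than the authors' implementation: the class name and the `forward`/`backward` interface are hypothetical, and the scaling factor `lam` is assumed to play the role of the trade-off weight in the adversarial objective.

```python
class GradientReversal:
    """Identity in the forward pass; scales the gradient by -lam in the backward pass."""

    def __init__(self, lam=1.0):
        self.lam = lam

    def forward(self, features):
        # Features pass through unchanged on the way to the domain classifier.
        return list(features)

    def backward(self, grad_output):
        # The gradient coming back from the domain classifier is sign-flipped and
        # scaled, so the feature extractor moves to increase the domain loss.
        return [-self.lam * g for g in grad_output]
```

In an autograd framework the same idea is usually written as a custom operation whose backward pass negates the incoming gradient.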

Domain Adversary With Varying SNR

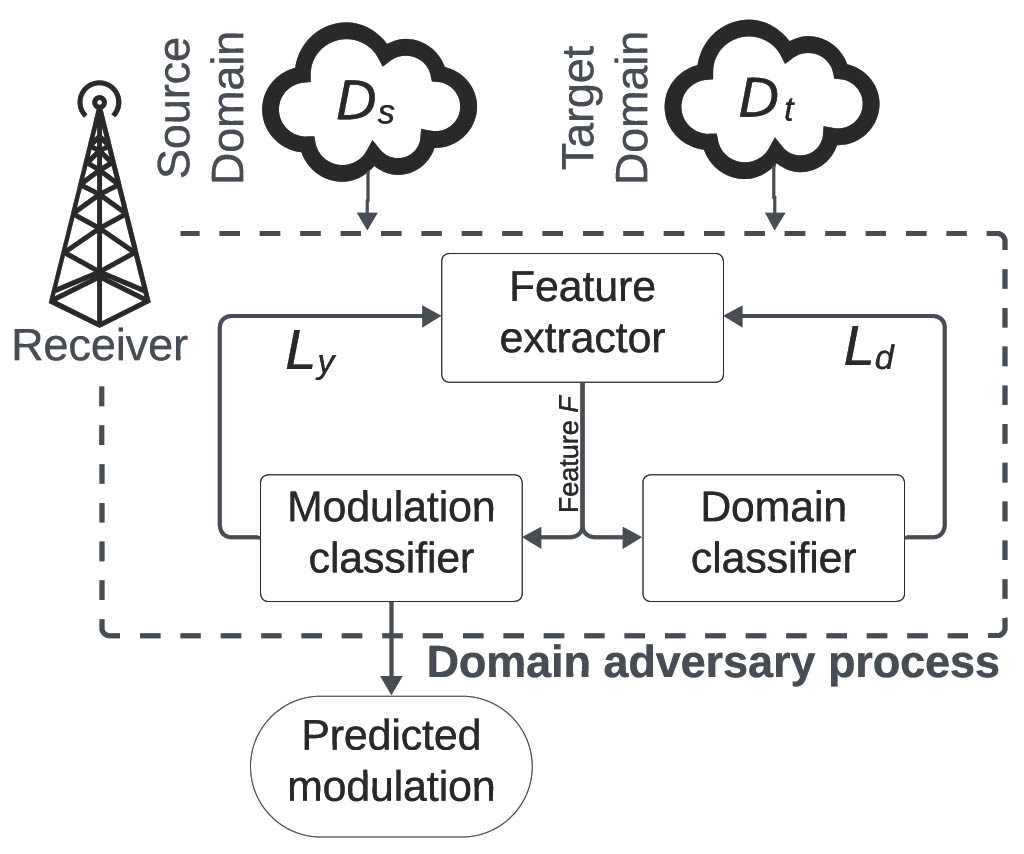

To address the issue of SNR variability in AMR, we propose a domain adversarial AMR learning framework. The overview of the proposed framework is illustrated in Fig. 1. Specifically, it consists of a pair of classifiers: a modulation classifier and a domain classifier. The goal is to learn a feature representation that is invariant to domain shifts caused by different SNR levels, thereby improving the robustness of the modulation recognition system.

In this approach, we define a feature extractor \( G_f(X; \theta_f) \), parameterized by \( \theta_f \), which maps the input \( X \) to a feature representation \( F \). The label predictor \( G_y(F; \theta_y) \), parameterized by \( \theta_y \), takes the feature representation \( F \) and predicts the modulation class label \( Y \). Additionally, a domain classifier \( G_d(F; \theta_d) \), parameterized by \( \theta_d \), predicts the domain label \( D \) (i.e., source or target domain). The framework is designed to minimize the modulation classification loss while maximizing the domain classification loss, thus learning features that are discriminative for modulation recognition but invariant to domain shifts (i.e., varying SNR levels).

The modulation classification loss \( \mathcal{L}_y \) is defined as follows to ensure that the features are useful for predicting the modulation class labels:

$$\mathcal{L}_y(\theta_f, \theta_y) = \mathbb{E}_{(X,Y) \sim P_s} \left[\ell_y\big(G_y(G_f(X; \theta_f); \theta_y),Y\big)\right]$$

where \( \ell_y \) is the cross-entropy loss for modulation classification.

The domain classification loss \( \mathcal{L}_d \) measures how well the domain classifier separates source features from target features; the feature extractor is trained so that the two become indistinguishable:

$$\mathcal{L}_d(\theta_f, \theta_d) = \mathbb{E}_{X \sim P_s} \left[\ell_d\big(G_d(G_f(X; \theta_f); \theta_d), 0\big)\right] + \mathbb{E}_{X \sim P_t} \left[\ell_d\big(G_d(G_f(X; \theta_f); \theta_d), 1\big)\right]$$

where \( \ell_d \) is the cross-entropy loss for binary classification on domains, with domain labels 0 for the source domain and 1 for the target domain.
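The two losses can be estimated on a mini-batch as sketched below, with the expectations replaced by sample means. The function names are hypothetical, and the inputs are assumed to be raw logits produced by \( G_y \) and \( G_d \).

```python
import math


def softmax(logits):
    """Numerically stable softmax over one sample's class logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def modulation_loss(batch_logits, batch_labels):
    """Empirical L_y: mean cross-entropy over labeled source samples."""
    losses = [-math.log(softmax(z)[y]) for z, y in zip(batch_logits, batch_labels)]
    return sum(losses) / len(losses)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def binary_ce(logit, d):
    """Binary cross-entropy of one domain logit against domain label d in {0, 1}."""
    p = sigmoid(logit)
    return -(d * math.log(p) + (1 - d) * math.log(1.0 - p))


def domain_loss(source_logits, target_logits):
    """Empirical L_d: source samples carry label 0, target samples label 1."""
    src = sum(binary_ce(z, 0) for z in source_logits) / len(source_logits)
    tgt = sum(binary_ce(z, 1) for z in target_logits) / len(target_logits)
    return src + tgt
```

A domain classifier that cannot tell the domains apart outputs a logit near 0 for every sample, which gives \( \mathcal{L}_d \approx 2 \ln 2 \); this is the regime the feature extractor pushes toward.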

The objective of domain adversarial learning in this DANN setup is to optimize the feature extractor and the label predictor to minimize the modulation classification loss, while the feature extractor is also trained to maximize the domain classification loss that the domain classifier tries to minimize. Mathematically, the objective function is:

$$\min_{\theta_f, \theta_y} \max_{\theta_d} \mathcal{L}_y(\theta_f, \theta_y) - \lambda \mathcal{L}_d(\theta_f, \theta_d)$$

where \( \lambda \) is a trade-off parameter that balances the modulation classification loss and the domain classification loss.
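Assuming plain stochastic gradient steps with a learning rate \( \mu \) (a symbol not used in the source), the saddle point of this objective can be approached with the following updates, as in the standard DANN formulation:

$$\theta_f \leftarrow \theta_f - \mu \left( \frac{\partial \mathcal{L}_y}{\partial \theta_f} - \lambda \frac{\partial \mathcal{L}_d}{\partial \theta_f} \right), \qquad \theta_y \leftarrow \theta_y - \mu \frac{\partial \mathcal{L}_y}{\partial \theta_y}, \qquad \theta_d \leftarrow \theta_d - \mu \lambda \frac{\partial \mathcal{L}_d}{\partial \theta_d}.$$

The negative sign on the domain term in the update of \( \theta_f \) is the effect that a gradient reversal layer produces during backpropagation.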

The training procedure involves several steps:

- Modulation features are extracted from the input data using the feature extractor \( G_f \).

- The modulation class labels are predicted using the modulation label predictor \( G_y \), and the modulation classification loss \( \mathcal{L}_y \) is computed.

- The domain labels are predicted using the domain classifier \( G_d \), and the domain classification loss \( \mathcal{L}_d \) is computed.

- The parameters \( \theta_f \) and \( \theta_y \) are updated to minimize the loss \( \mathcal{L}_y \).

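- Meanwhile, \( \theta_d \) is updated to minimize \( \mathcal{L}_d \), while the gradient reversal layer makes the update on \( \theta_f \) maximize it, i.e., \( \theta_f \) minimizes \( -\lambda \mathcal{L}_d \).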
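The training steps can be sketched on a toy model with hand-written gradients. Everything here is an illustrative assumption rather than the paper's architecture: the feature extractor is a single weight `w_f`, the label predictor a single weight `w_y` over two classes, and the domain classifier a weight `w_d` with bias `b_d`. Following the min-max objective, the domain classifier descends \( \mathcal{L}_d \), while the feature extractor receives the domain gradient scaled by \( -\lambda \).

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def bce(p, t):
    """Binary cross-entropy of probability p against target t in {0, 1}."""
    return -(t * math.log(p) + (1 - t) * math.log(1.0 - p))


def train_step(params, source, target, lam=1.0, lr=0.1):
    """One adversarial update on a toy scalar model (hypothetical helper).

    params: dict with keys w_f, w_y, w_d, b_d.
    source: list of (x, y) pairs with y in {0, 1}; target: list of unlabeled x.
    Returns the updated params and the losses L_y, L_d before the update.
    """
    w_f, w_y, w_d, b_d = (params[k] for k in ("w_f", "w_y", "w_d", "b_d"))
    g_wf = g_wy = g_wd = g_bd = 0.0
    loss_y = loss_d = 0.0

    # Extract features, predict labels, accumulate L_y on labeled source data.
    for x, y in source:
        f = w_f * x
        p = sigmoid(w_y * f)
        loss_y += bce(p, y) / len(source)
        g_wy += (p - y) * f / len(source)
        g_wf += (p - y) * w_y * x / len(source)

    # Predict domains, accumulate L_d (source -> 0, target -> 1).
    domain_batches = (([x for x, _ in source], 0), (list(target), 1))
    for xs, d in domain_batches:
        for x in xs:
            f = w_f * x
            q = sigmoid(w_d * f + b_d)
            loss_d += bce(q, d) / len(xs)
            g_wd += (q - d) * f / len(xs)
            g_bd += (q - d) / len(xs)
            # Gradient reversal: the domain gradient reaches w_f scaled by -lam.
            g_wf += -lam * (q - d) * w_d * x / len(xs)

    # Gradient steps: w_f and w_y reduce L_y, w_d and b_d reduce L_d,
    # and the reversed term makes w_f increase L_d.
    new_params = {
        "w_f": w_f - lr * g_wf,
        "w_y": w_y - lr * g_wy,
        "w_d": w_d - lr * g_wd,
        "b_d": b_d - lr * g_bd,
    }
    return new_params, loss_y, loss_d
```

Calling `train_step` repeatedly on mini-batches of labeled source samples and unlabeled target samples mirrors the procedure above. Setting `lam=0.0` removes the adversarial term and reduces the loop to ordinary supervised training on the source domain.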

By incorporating domain adversarial training, the proposed framework enhances the ability of AMR models to generalize across different SNR conditions, improving their real-world applicability.

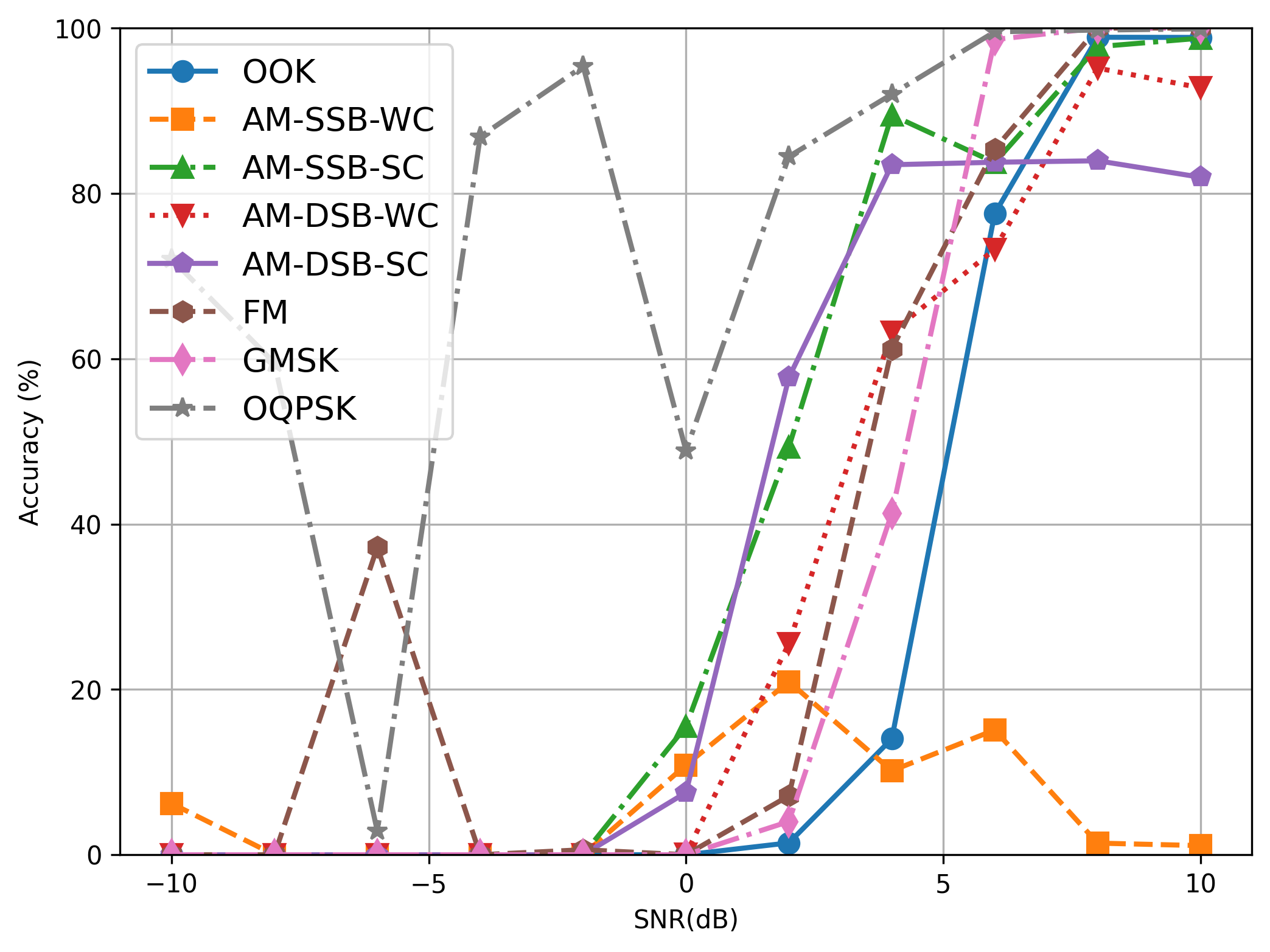

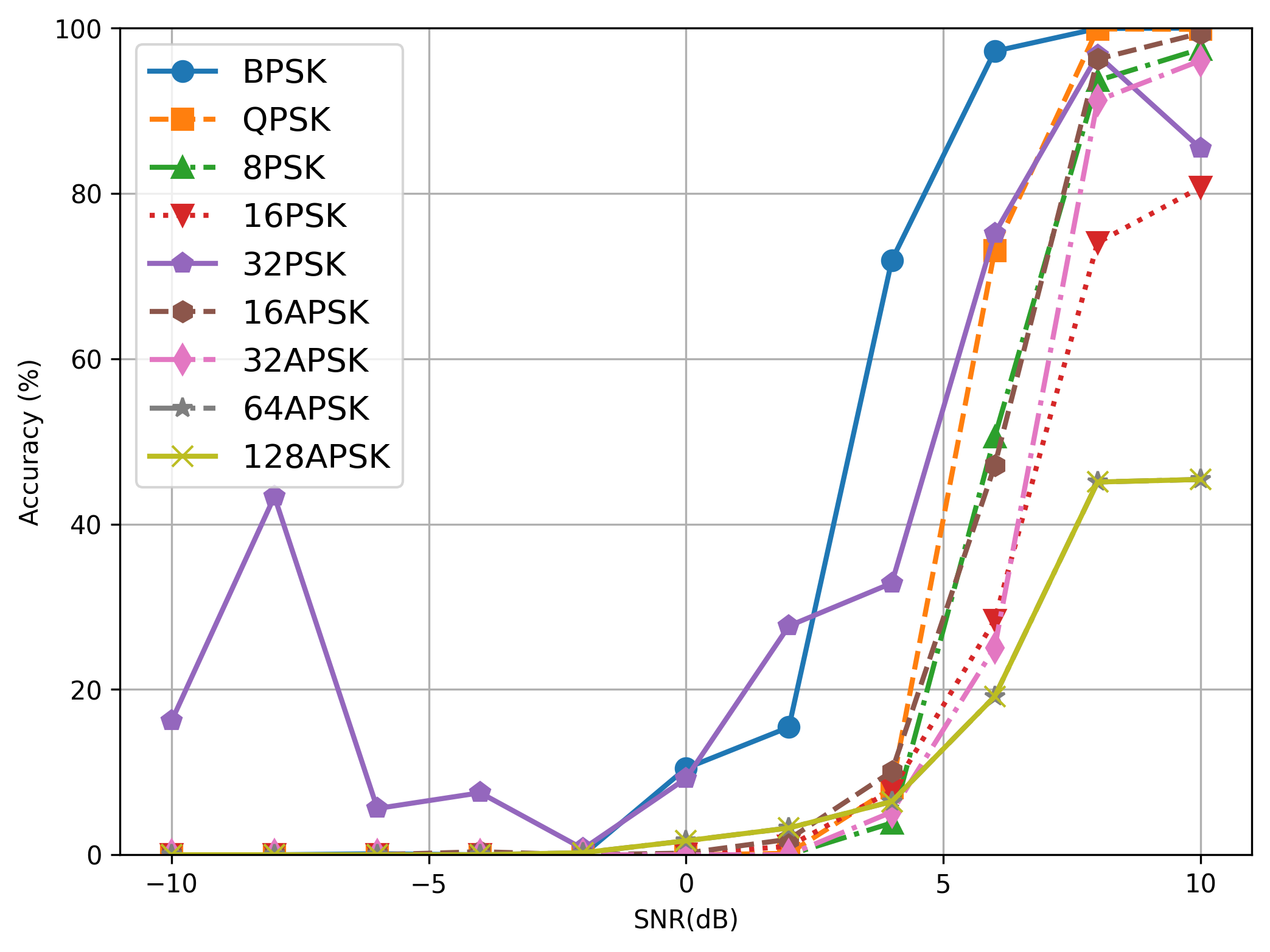

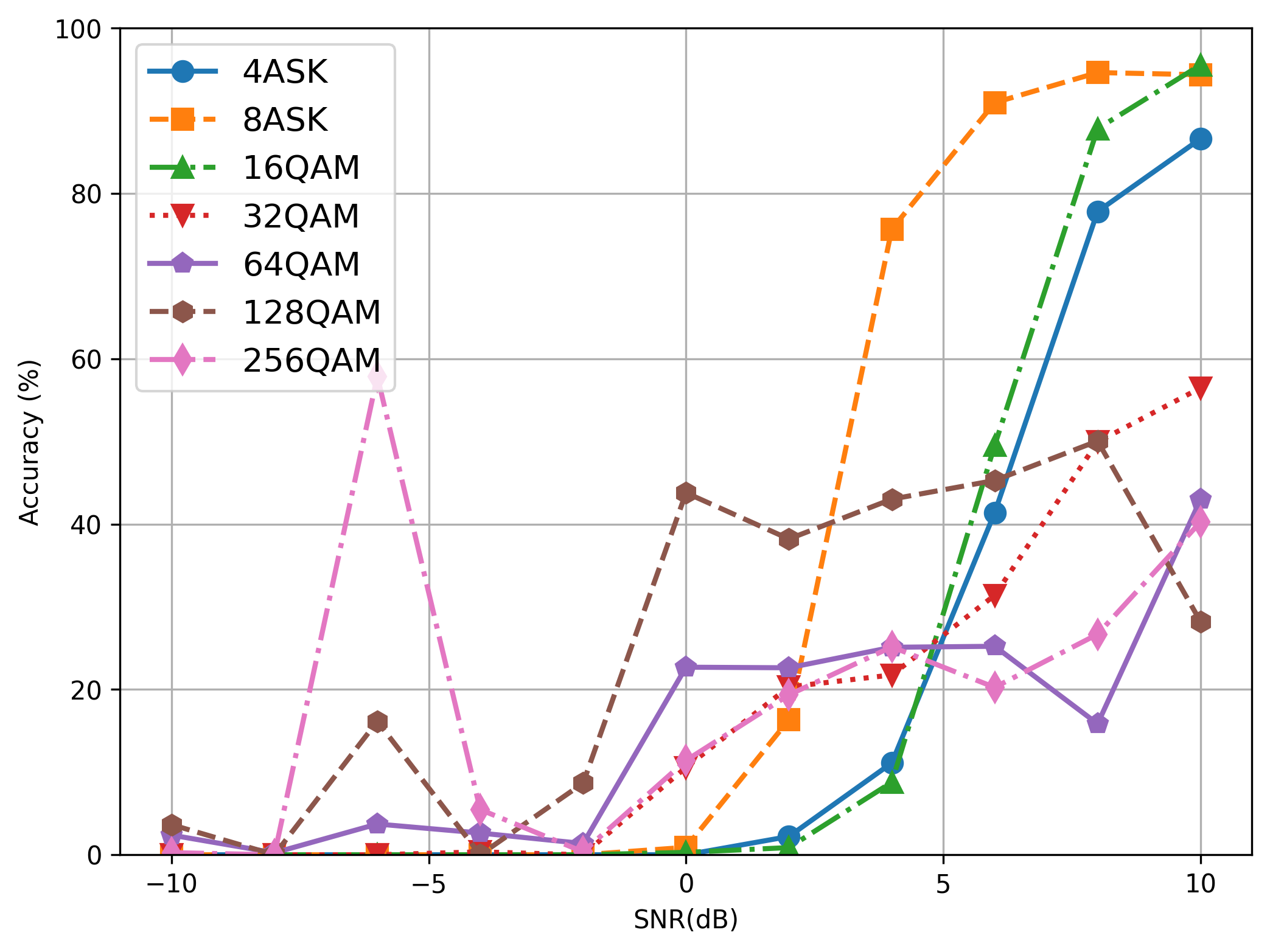

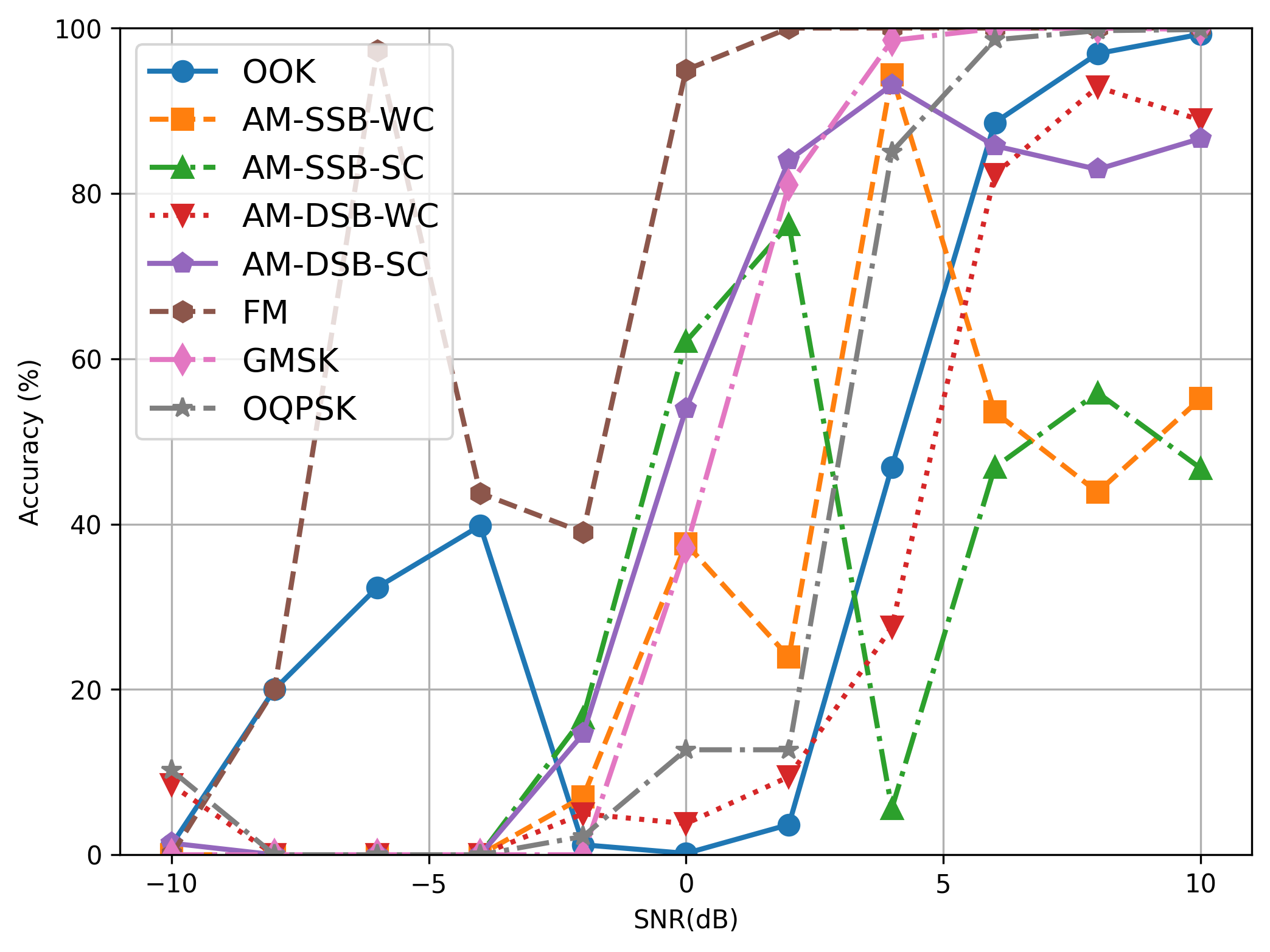

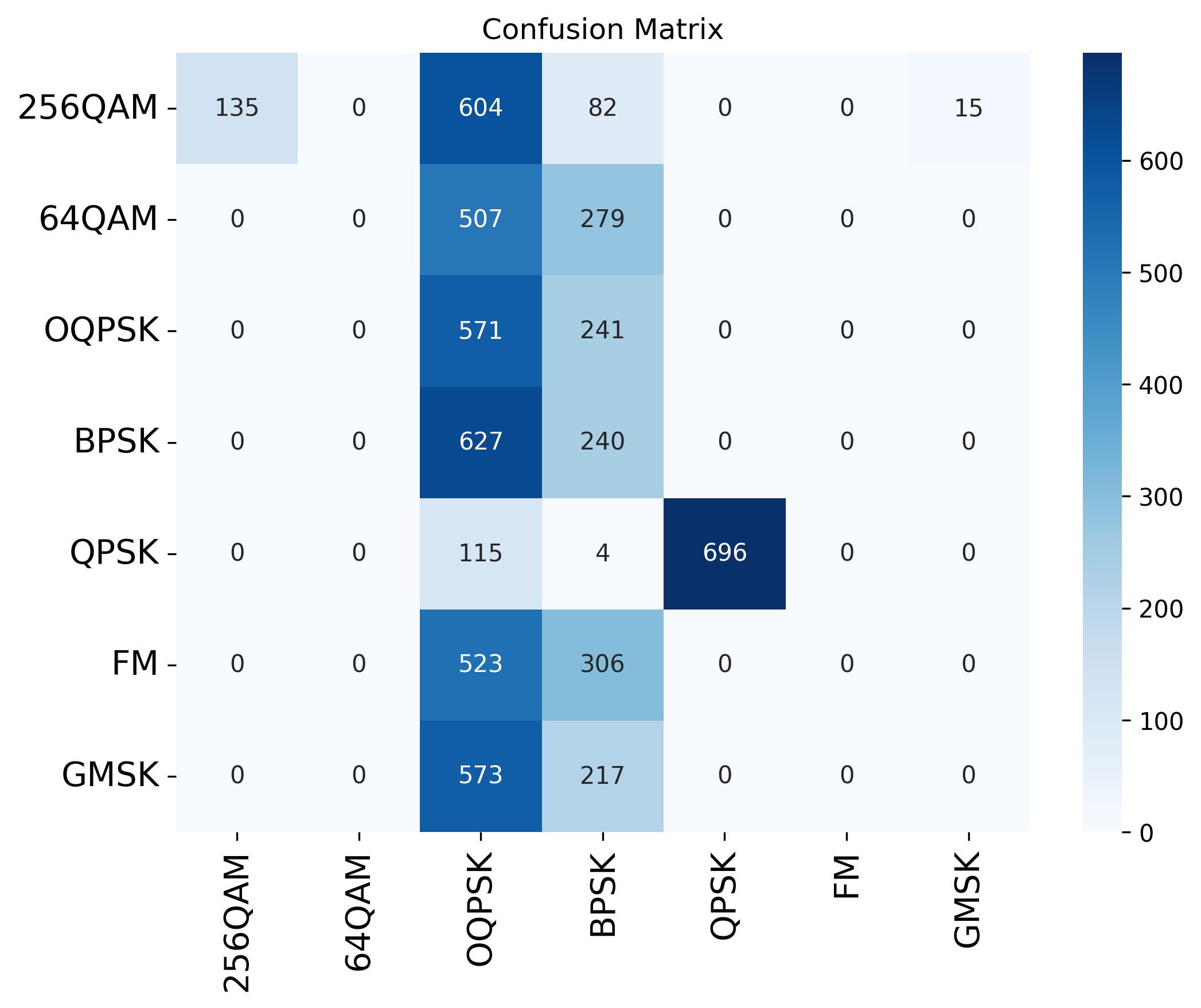

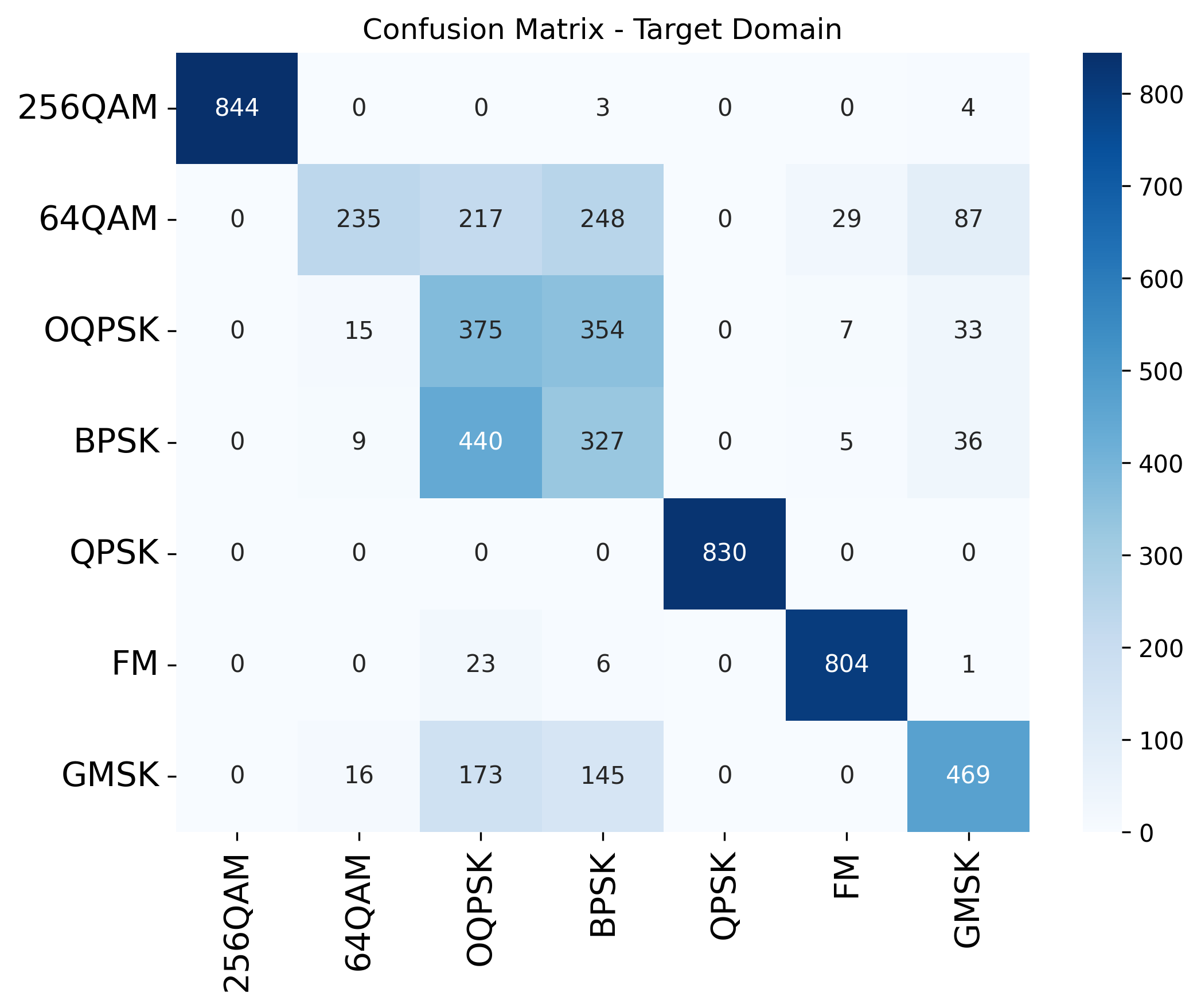

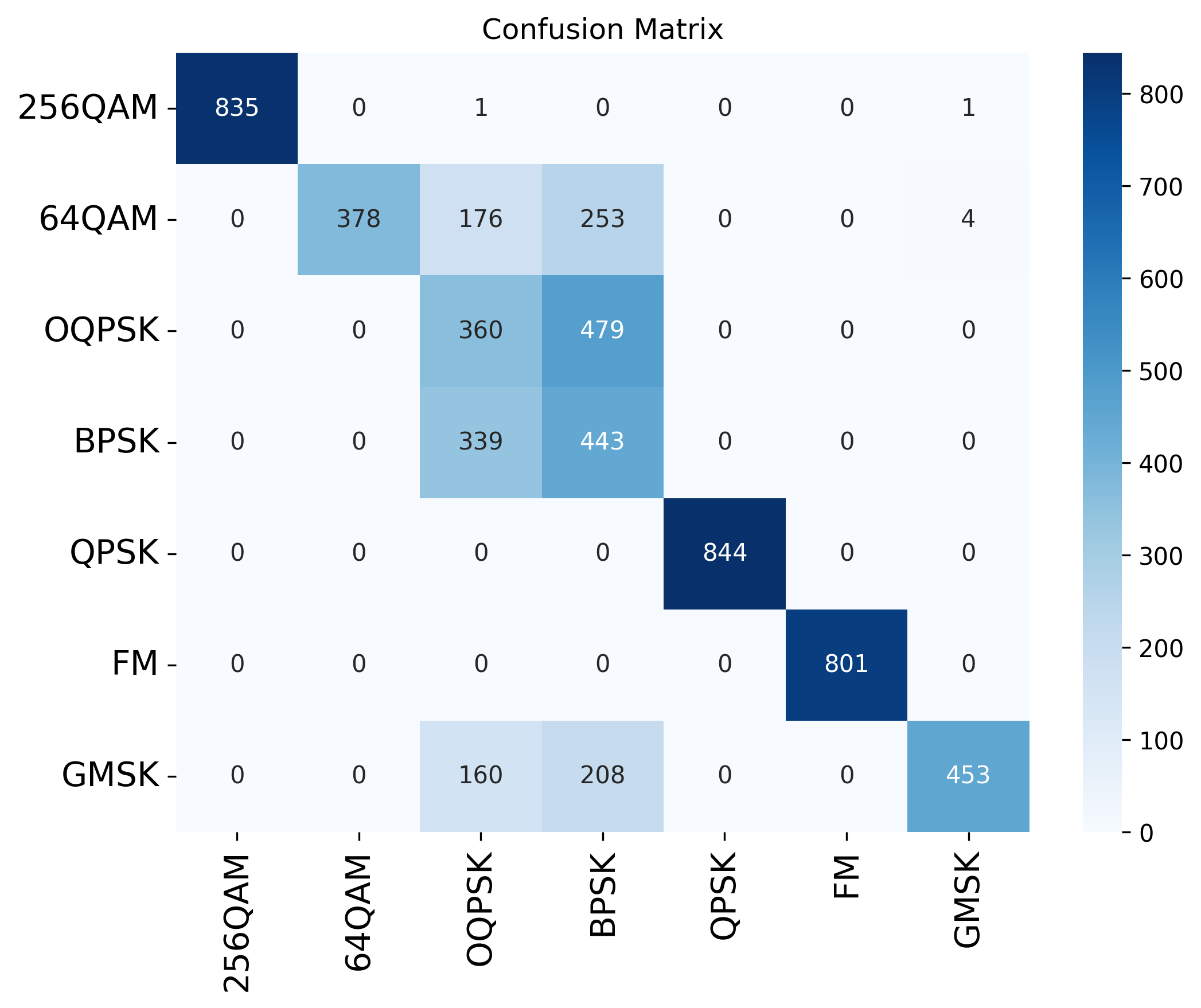

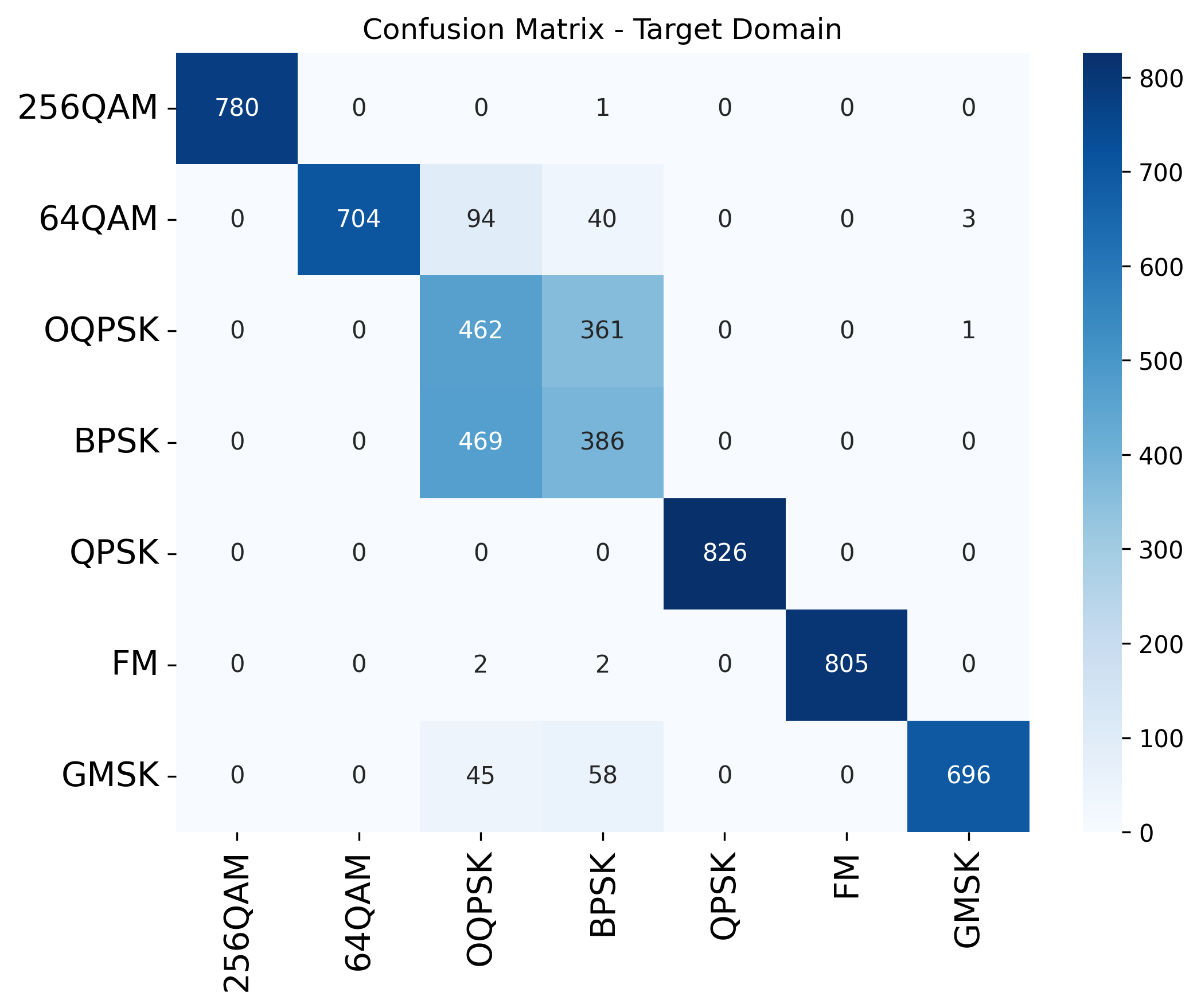

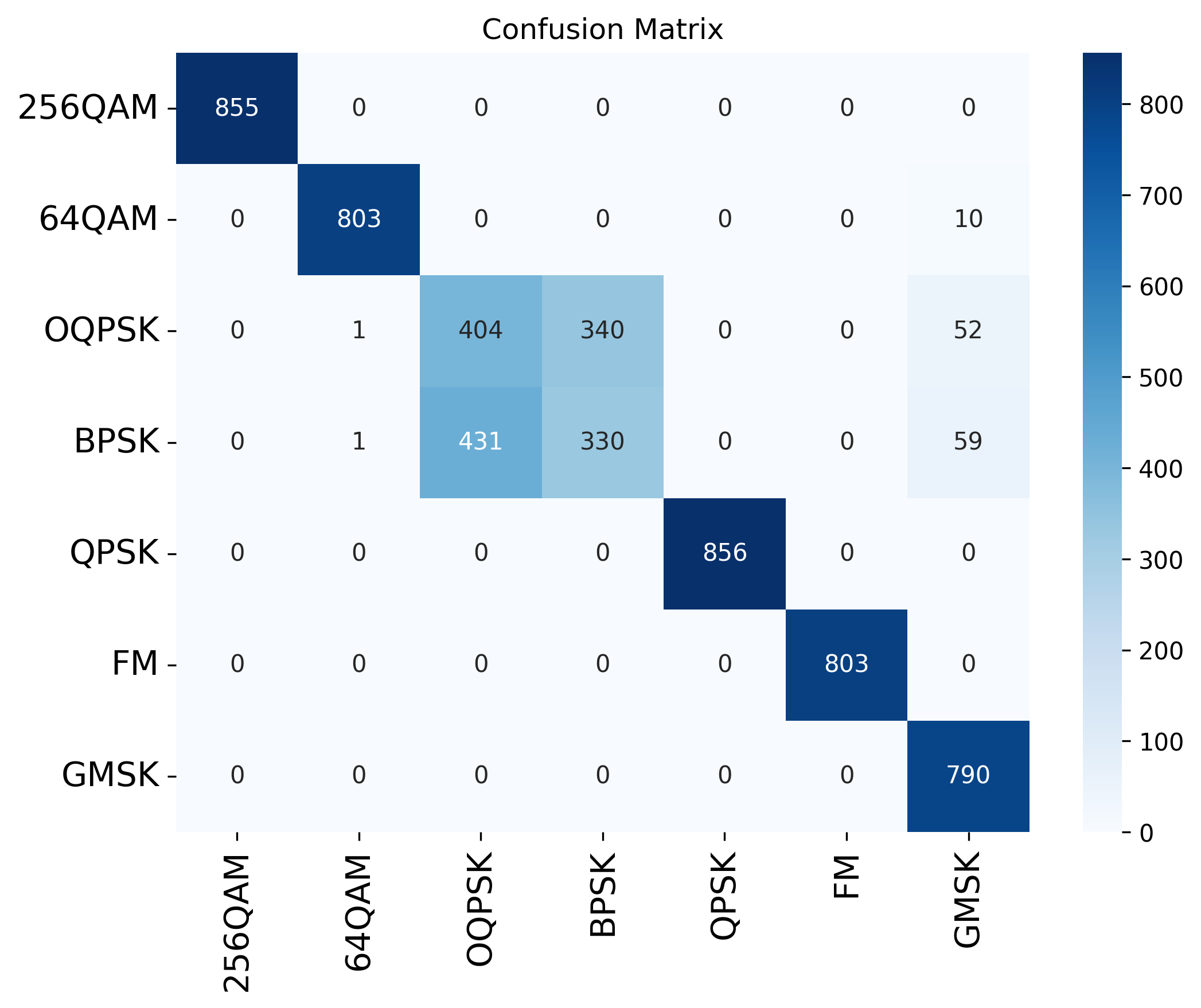

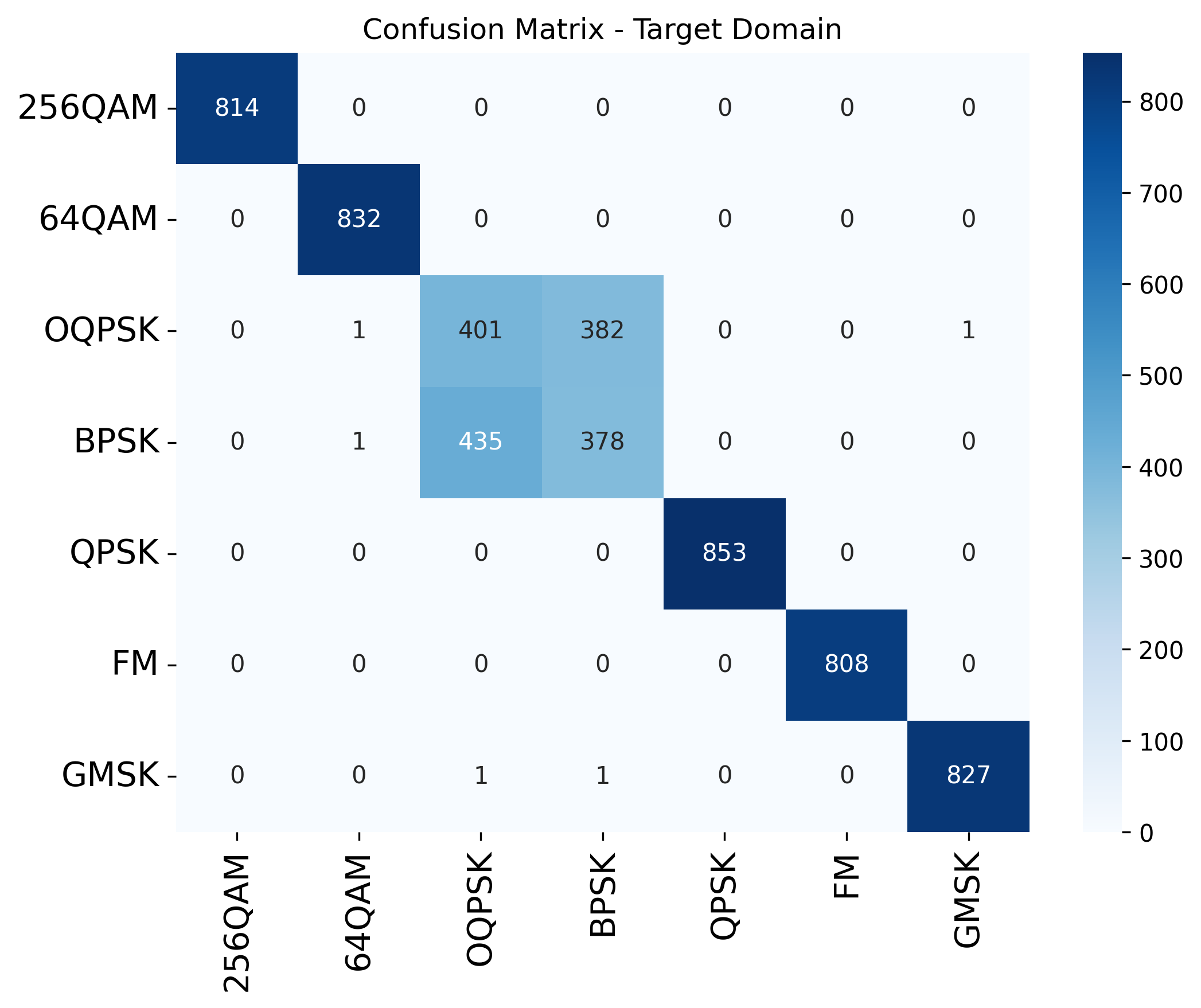

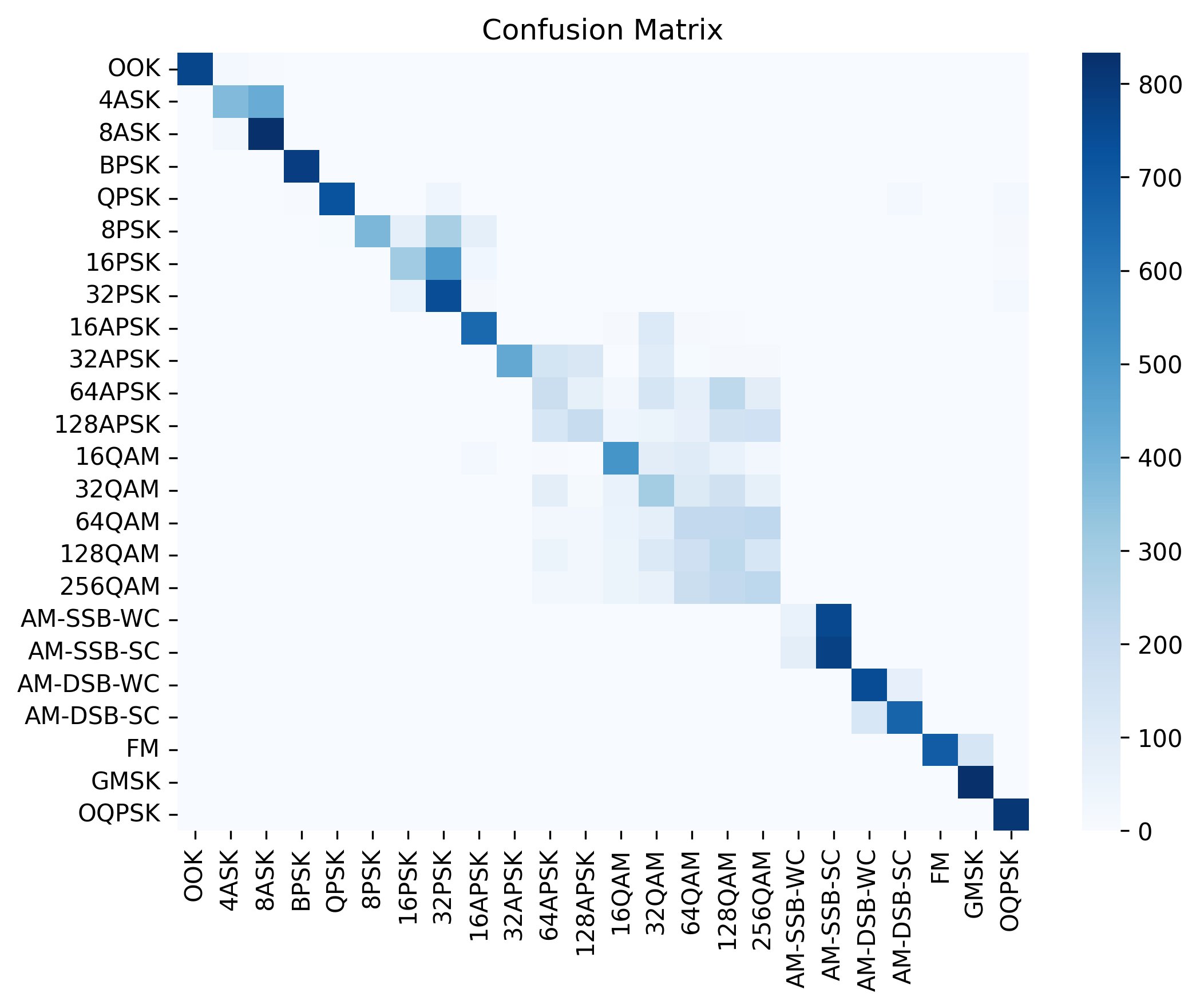

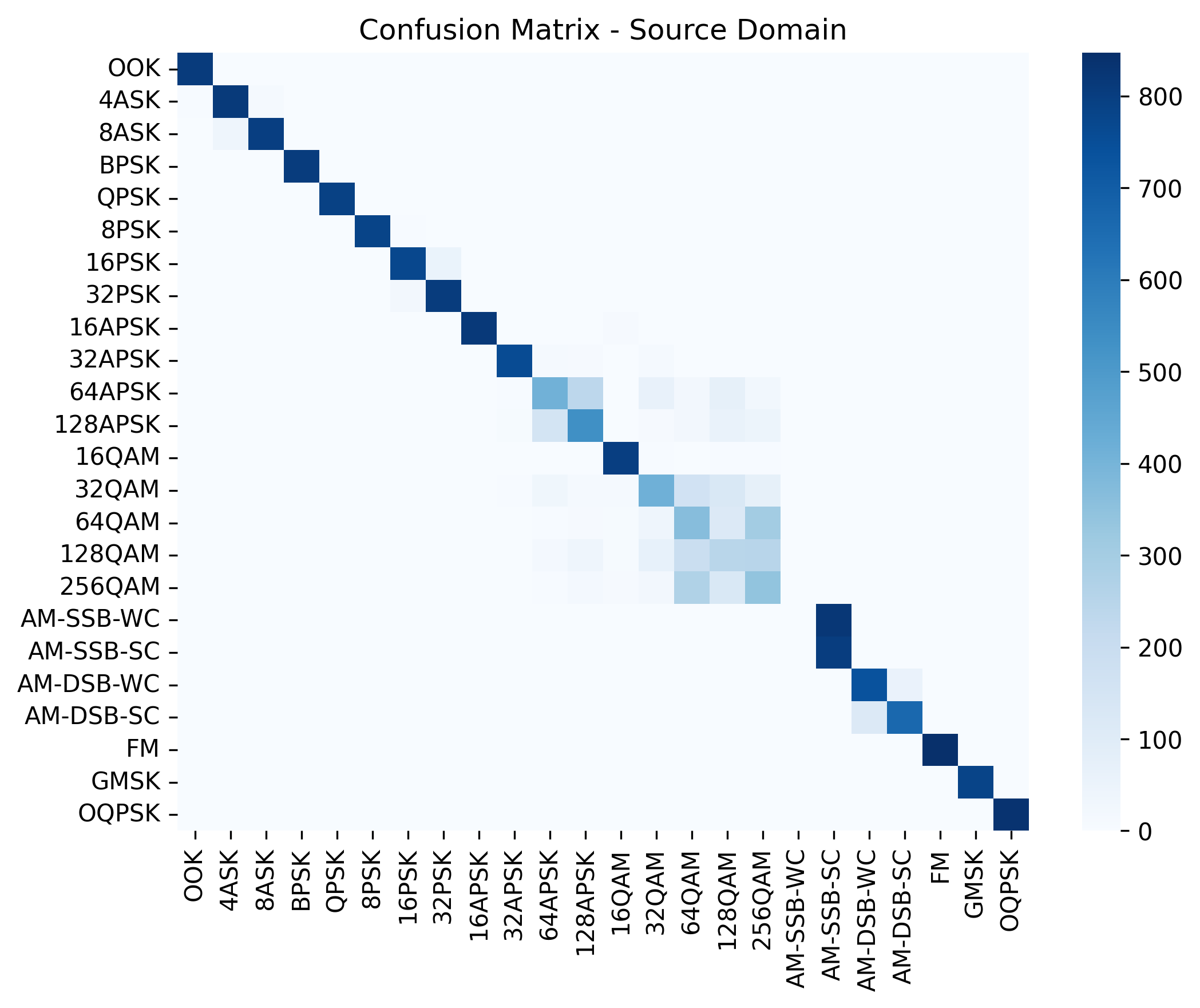

Experimental Results

Conclusion

This paper addresses the challenge of domain variability in AMR caused by fluctuations in SNR. Traditional methods tend to struggle under real-world conditions with variable SNR levels. We propose an AMR framework that leverages domain adversarial learning to mitigate domain shifts caused by SNR variability, enhancing AMR model robustness. Extensive experiments show that our DANN-based framework improves classification accuracy by up to 16% in low to moderate SNR environments while maintaining performance in extreme SNR conditions. This enhances the resilience and generalizability of AMR systems, making them more suitable for real-world deployment. Future research will focus on refining domain adaptation techniques and implementing testbeds to further evaluate and enhance model performance in real-world environments.