Explainable Artificial Intelligence Enabled Intrusion Detection in the Internet of Things

Introduction

The Internet of Things (IoT) has revolutionized connectivity, enabling automation across environments such as smart homes, industries, and cities. IoT devices continuously collect data and provide real-time adjustments, enhancing efficiency. However, this connectivity introduces significant cybersecurity challenges due to the expanding attack surface and diverse device landscape. Traditional security measures, including firewalls, authentication, and encryption, are often insufficient against evolving IoT threats. Intrusion Detection Systems (IDS) play a critical role in network security by monitoring network traffic and detecting malicious activity. The integration of Artificial Intelligence (AI) has further improved IDS effectiveness through Machine Learning (ML) and Deep Learning (DL) models, which analyze traffic patterns to identify cyber threats. Despite their success, AI-based IDS typically operate as black-box systems, making their decision-making process opaque. Explainable Artificial Intelligence (XAI) techniques, such as Local Interpretable Model-agnostic Explanations (LIME) and SHapley Additive exPlanations (SHAP), have been explored to increase transparency by revealing how AI models classify intrusions. These methods help users understand and trust AI-driven security decisions, yet their interpretability still needs further refinement.

Method

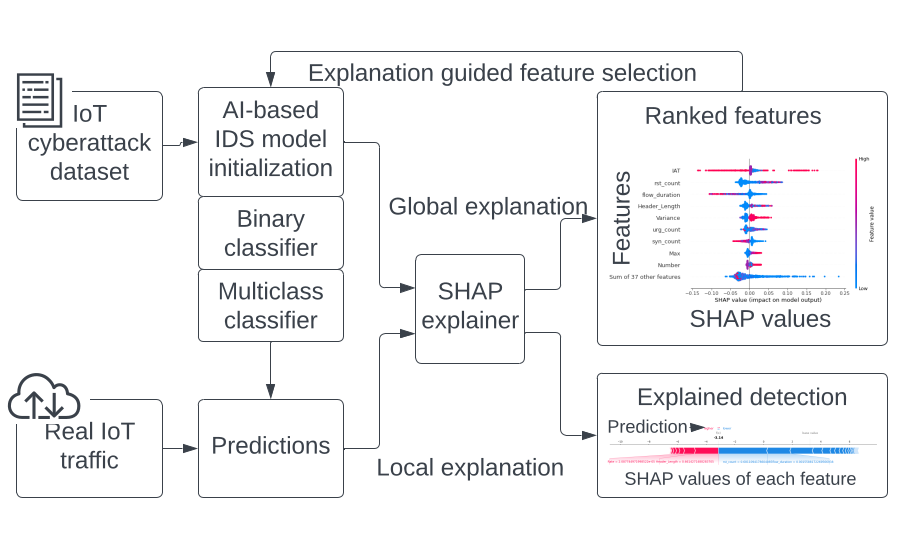

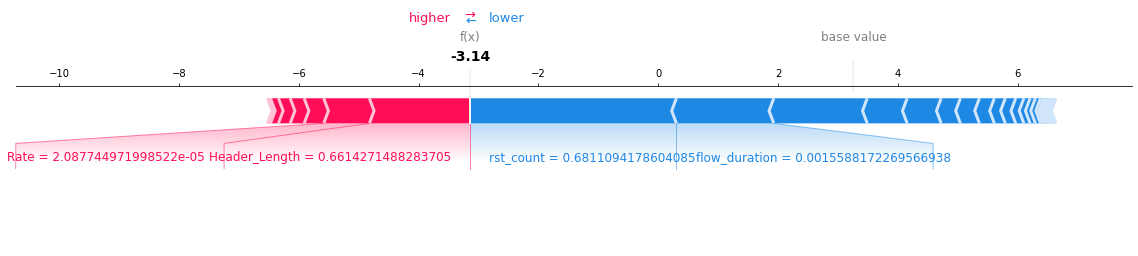

The proposed framework enhances the interpretability of AI-based IDS in IoT networks by integrating SHAP explanations. It consists of two primary components: a conventional IDS structure and a SHAP-based model explainer. The IDS employs binary and multiclass classifiers to detect and categorize cyberattacks, while the explainer module provides feature attribution insights, aiding cybersecurity professionals in refining IDS models. SHAP assigns importance scores to features by decomposing a model’s prediction into contributions from each input variable. Given a model \( f(\cdot) \) and an explainer model \( g(\cdot) \), the explanation output is defined as:

$$ g(x') = \phi_0 +\sum_{i = 1}^{M}{\phi_i x_i'} $$

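where \( x' \in \{0, 1\}^M \) is the simplified binary input indicating which features are present, \( \phi_0 \) is the base value (the explanation output when no feature is present), \( \phi_i \) represents the importance of feature \( i \), and \( M \) is the number of features considered. SHAP values are computed as: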

$$ \phi_i(f, x) = \sum_{S \subseteq N \setminus \{i\}}{\frac{|S|!\,(M-|S|-1)!}{M!} \left[ f_x(S \cup \{i\}) - f_x(S) \right]} $$

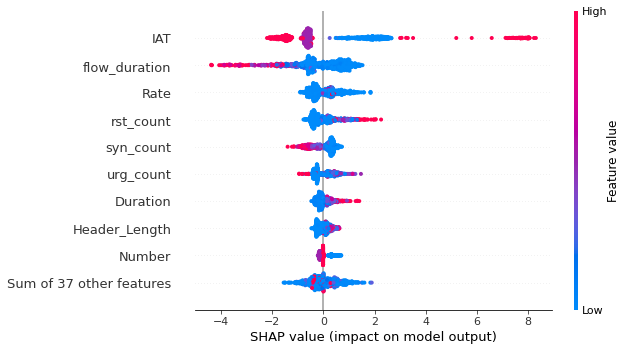

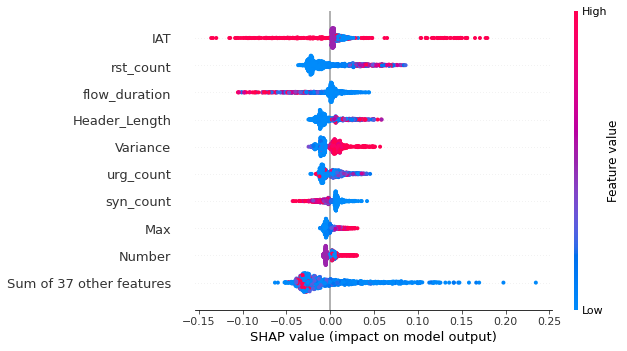

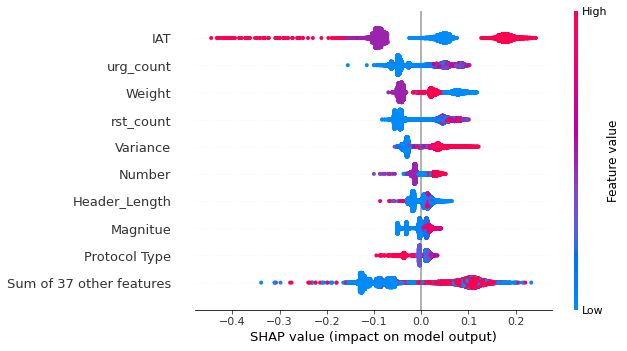

Here, \( N \) is the set of all \( M \) features, \( S \) is a subset of features that does not contain feature \( i \), and \( f_x(S) \) is the model output conditioned on the features in \( S \). Local explanations analyze individual predictions through decision plots, while global explanations aggregate SHAP values to show overall feature importance.
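To make the Shapley formula concrete, the following minimal sketch evaluates it by brute force for a three-feature toy function. It is an illustration only, not the implementation used in this work: `toy_model`, the input, and the all-zero baseline are hypothetical, and \( f_x(S) \) is approximated by replacing the features outside \( S \) with baseline values rather than by a conditional expectation. The enumeration grows exponentially with \( M \), which is why practical SHAP implementations rely on approximations.

```python
from itertools import combinations
from math import factorial


def shapley_values(f, x, baseline):
    """Exact Shapley values of f at x by enumerating every subset S.

    Assumption: f_x(S) is approximated by keeping the features in S at
    their values in x and setting all other features to the baseline.
    """
    M = len(x)
    features = range(M)

    def f_x(S):
        z = [x[i] if i in S else baseline[i] for i in features]
        return f(z)

    phi = []
    for i in features:
        others = [j for j in features if j != i]
        total = 0.0
        for size in range(M):
            # Weight |S|! (M - |S| - 1)! / M! from the Shapley formula.
            weight = factorial(size) * factorial(M - size - 1) / factorial(M)
            for subset in combinations(others, size):
                S = set(subset)
                total += weight * (f_x(S | {i}) - f_x(S))
        phi.append(total)

    phi_0 = f_x(set())  # base value: no feature present
    return phi_0, phi


def toy_model(z):
    # Hypothetical scoring function: one additive term, one interaction.
    return 2.0 * z[0] + z[1] * z[2]


x = [1.0, 2.0, 3.0]
phi_0, phi = shapley_values(toy_model, x, baseline=[0.0, 0.0, 0.0])
print(phi_0, phi)
```

In this toy case the additive term is attributed entirely to the first feature, the interaction term is split equally between the other two, and \( \phi_0 + \sum_i \phi_i \) recovers the model output at `x`, matching the additive form of \( g(x') \).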

A new IDS model is constructed by selecting critical features based on SHAP attributions. Features are ranked using the mean absolute SHAP value:

$$ \phi_i{(x)} = \frac{1}{|X|} \sum_{j=1}^{|X|} |\phi_i^j| $$

The top-ranked features are used to create a reduced dataset, which is then employed to train a refined IDS model. This guided approach ensures the selection of the most influential features, improving IDS efficiency while maintaining high detection accuracy.
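As a rough illustration of this ranking and selection step, the sketch below computes the mean absolute SHAP value per feature and keeps the top-ranked columns. The feature names, SHAP matrix, and data rows are made-up values for demonstration, and the helper names `rank_features` and `select_top_features` are hypothetical; in practice the SHAP matrix would come from an explainer applied to the trained IDS.

```python
def rank_features(shap_matrix, feature_names):
    """Rank features by mean |SHAP| over all samples, highest first."""
    n_samples = len(shap_matrix)
    scores = {
        name: sum(abs(row[i]) for row in shap_matrix) / n_samples
        for i, name in enumerate(feature_names)
    }
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


def select_top_features(rows, feature_names, ranking, n):
    """Build the reduced dataset from the n top-ranked features."""
    kept = [name for name, _ in ranking[:n]]
    columns = [feature_names.index(name) for name in kept]
    return kept, [[row[c] for c in columns] for row in rows]


# Hypothetical feature names and values, for illustration only.
feature_names = ["flow_duration", "syn_count", "header_length"]
shap_matrix = [  # one row of per-feature SHAP values per sample
    [0.10, -0.50, 0.00],
    [-0.30, 0.70, 0.10],
    [0.20, -0.60, -0.05],
]
X = [
    [1.2, 30, 54],
    [0.4, 2, 60],
    [2.5, 41, 54],
]

ranking = rank_features(shap_matrix, feature_names)
kept, X_new = select_top_features(X, feature_names, ranking, n=2)
print(kept)
print(X_new)
```

Taking the absolute value before averaging matters: a feature that pushes some predictions strongly toward "attack" and others strongly toward "benign" would otherwise average out to near zero despite being influential.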

Algorithm

1. Input: Original dataset {X, y}, Pre-trained AI model, Number of features to select (N)

2. Compute SHAP values:

Use the SHAP library or a suitable SHAP implementation to compute SHAP values for each feature in the dataset.

3. Rank features by importance:

Calculate the average absolute SHAP value for each feature using:

$$\phi_i{(x)} = \frac{1}{|X|} \sum_{j=1}^{|X|} |\phi_i^j|$$

Sort the features in descending order based on their average absolute SHAP values.

4. Create new dataset using the top N features:

Select the top N features from the sorted list.

Create a new dataset using only the N most important features from the original dataset:

$$X_{\text{new}} = \{f_i \in X \mid \text{FeatureRank}(f_i) \leq N\}$$

5. Train the new IDS model:

Split the new dataset into training and testing sets.

Choose an appropriate machine learning algorithm or model architecture.

Train the new AI model using the training data containing only the top N features.

6. Evaluate the new IDS model:

Evaluate the performance of the AI model using the testing data.

Quantify the performance using evaluation metrics (accuracy, recall, precision, etc.).

Fine-tune hyperparameters if performance is unsatisfactory.
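The evaluation in step 6 can be sketched as follows for the binary (attack vs. benign) case. This is a minimal illustration with made-up labels and a hypothetical helper `binary_metrics`, not the evaluation code used in this work; for the multiclass classifier the same counts would be computed per class and then averaged.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, and recall with `positive` as the attack class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)
    return {
        "accuracy": correct / len(pairs),
        # Guard against division by zero when no positives are predicted
        # or no positives are present.
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
    }


# Made-up test labels (1 = attack, 0 = benign), for illustration only.
y_true = [1, 1, 1, 0, 0, 1, 0, 1]
y_pred = [1, 0, 1, 0, 1, 1, 0, 1]

metrics = binary_metrics(y_true, y_pred)
print(metrics)
```

Comparing these metrics between the model trained on all features and the model trained on the top N features indicates whether the reduced feature set preserves detection performance.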

Experimental Results

Conclusion

IoT has become deeply integrated into daily life. However, the heterogeneity of IoT networks makes them more vulnerable to cyber threats. AI-based IDS have emerged as effective tools for countering IoT cyberattacks, but validating and analyzing potential threats requires a deeper understanding of these models and the ability to explain their predictions. Although XAI has garnered significant interest, its application in IoT cybersecurity still requires thorough investigation to better understand its effectiveness in identifying attack surfaces and vectors. In this paper, we introduce a framework for explainable intrusion detection in IoT. We developed several IDS using different AI techniques, e.g., Random Forest and Multilayer Perceptron. The implemented models are deployed to detect cyberattacks, and SHAP is applied to explain the model decisions. The SHAP explanations are examined by building new detection models on the subset of features suggested by the explanation results. We evaluated our framework using the recently released CICIoT2023 dataset. The evaluation results demonstrate that the proposed framework can assist decision-makers in comprehending complicated attack behaviors. In the future, we will apply other XAI techniques to explain the decisions made by AI-based IDS. We will also evaluate the framework on other real-world datasets to assess how well the results generalize.